
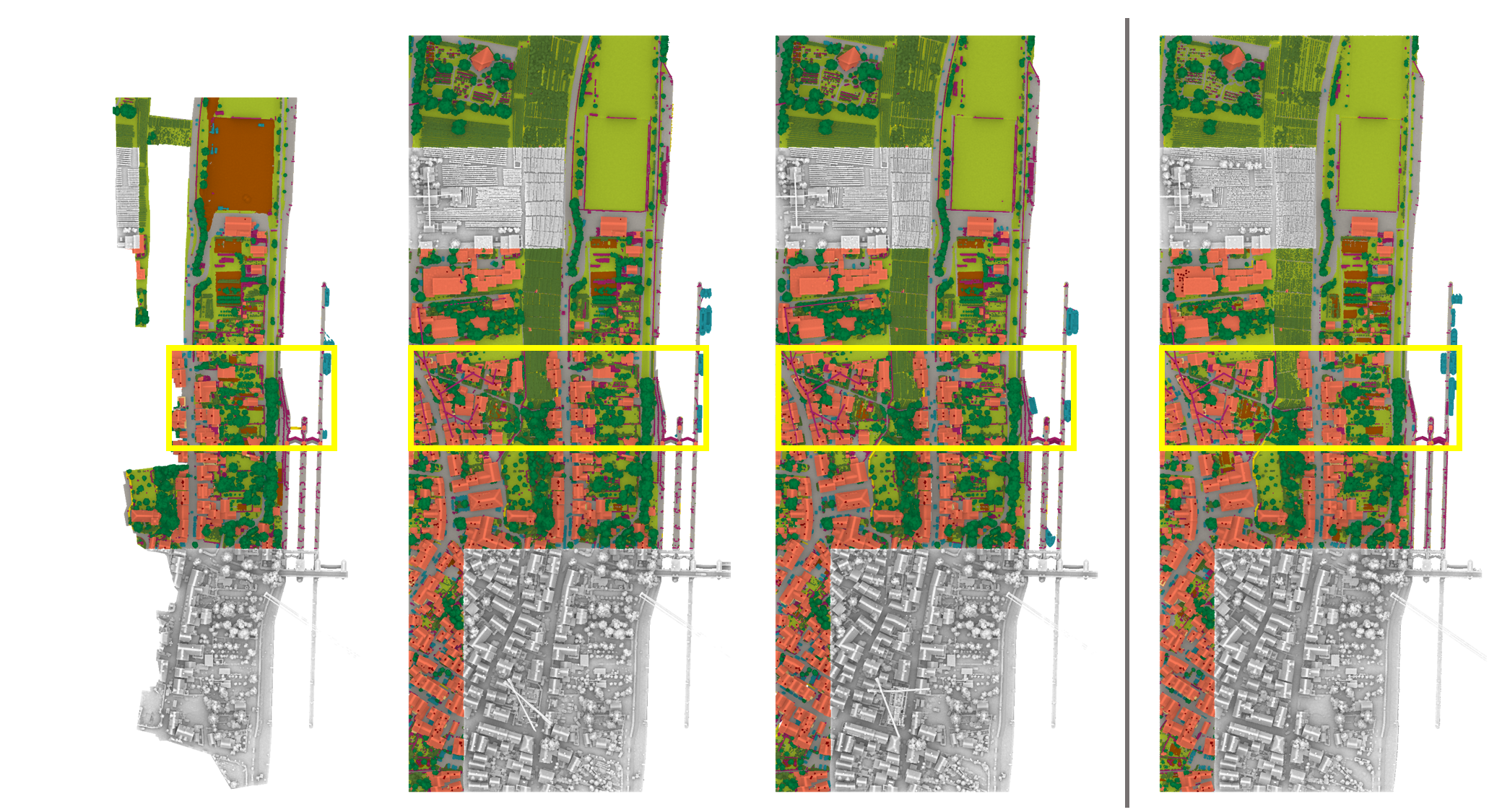

Partition of the H3D(PC) dataset (epoch March 2018) into training (colored by class colors), validation (colored by class colors and marked by a yellow box) and test set (grey). The data splits of H3D(Mesh) are identical but organized in tiles. North points to the right.

The data of each epoch are split into distinct training, validation and test areas for both representations (see the figure above). The splits are congruent across both modalities and follow the mesh tiling. Accordingly, the training and validation labels may be used for training, while the labels of the test set are kept sealed.

We encourage researchers to participate in this benchmark by testing their methods on H3D. Participants who intend to take part in the evaluation are asked to submit their predicted labels for the test area as a simple ASCII file for either H3D(PC) or H3D(Mesh), with the columns [X, Y, Z, classification]. For the mesh, XYZ refers to the centers of gravity (CoG) of the mesh faces. The point order of this file must be identical to that of the provided test file. Submissions with a deviating point order or additional columns will be rejected immediately.
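To illustrate the required format, the following is a minimal Python sketch (not an official H3D tool) that builds submission rows by appending one predicted label to the X, Y, Z columns of each row of the provided test file, plus a check that the row order and the column count match. It assumes the test file is whitespace-separated ASCII with X, Y, Z in the first three columns and no header line; the helper names `build_submission` and `same_order` and the coordinates in the demo are hypothetical.

```python
from typing import Iterable, List, Sequence


def _rows(lines: Iterable[str]) -> List[List[str]]:
    """Split whitespace-separated ASCII lines into tokens, skipping blank lines."""
    return [line.split() for line in lines if line.strip()]


def build_submission(test_lines: Iterable[str], labels: Sequence[int]) -> List[str]:
    """Hypothetical helper: append one predicted class to X, Y, Z of each test row.

    Coordinate strings are copied verbatim and the row order is kept, so the
    result has exactly four columns: X Y Z classification.
    Assumes X, Y, Z are the first three columns and there is no header line.
    """
    rows = _rows(test_lines)
    if len(rows) != len(labels):
        raise ValueError(f"{len(rows)} test rows but {len(labels)} labels")
    return [f"{r[0]} {r[1]} {r[2]} {int(label)}" for r, label in zip(rows, labels)]


def same_order(test_lines: Iterable[str], submission_lines: Iterable[str]) -> bool:
    """Hypothetical check: four columns per row and X, Y, Z in test-file order."""
    test_xyz = [tuple(r[:3]) for r in _rows(test_lines)]
    sub_rows = _rows(submission_lines)
    if any(len(r) != 4 for r in sub_rows):
        return False  # missing or additional columns
    return [tuple(r[:3]) for r in sub_rows] == test_xyz


if __name__ == "__main__":
    test = ["512.10 5410.20 250.30", "512.15 5410.22 250.31"]  # made-up coordinates
    submission = build_submission(test, [3, 7])
    print("\n".join(submission))
    print(same_order(test, submission))
```

Copying the coordinate strings instead of re-formatting parsed floats avoids rounding differences between the test file and the submission. The rows could then be written with, e.g., `pathlib.Path("submission.txt").write_text("\n".join(submission) + "\n")`, where the file name is only a placeholder.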

Evaluation is done by comparing the results received from participants with the ground truth labels. For this purpose, a normalized confusion matrix, the overall accuracy, per-class F1 scores and the mean F1 score are derived, which are i) returned to the participants and ii) made publicly available as part of the benchmark ranking on the results page of our website. Participants are also asked to provide contact details and information on their applied method, e.g., a short description or a link to a recent publication of their approach.
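For readers who want to reproduce these metrics locally, here is a minimal pure-Python sketch. It is not the H3D evaluation code; in particular, normalizing the confusion matrix by rows (reference classes) and excluding classes that occur in neither reference nor prediction from the mean F1 are assumptions of this sketch. Labels are assumed to be integers in `0..num_classes-1`, and the function name `evaluate` is hypothetical.

```python
from typing import Dict, List, Optional, Sequence


def evaluate(y_true: Sequence[int], y_pred: Sequence[int], num_classes: int) -> Dict[str, object]:
    """Sketch: normalized confusion matrix, overall accuracy, per-class and mean F1."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("need equally long, non-empty label sequences")

    # Confusion matrix: rows = reference class, columns = predicted class.
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        if not (0 <= t < num_classes and 0 <= p < num_classes):
            raise ValueError(f"label out of range: {t}, {p}")
        cm[t][p] += 1

    # Assumption: each row is divided by the number of reference samples of that class.
    cm_norm = []
    for row in cm:
        n = sum(row)
        cm_norm.append([v / n if n else 0.0 for v in row])

    overall_accuracy = sum(cm[k][k] for k in range(num_classes)) / len(y_true)

    # Per-class F1 = 2TP / (2TP + FP + FN); None if the class is absent from
    # both reference and prediction (such classes are left out of the mean).
    f1: List[Optional[float]] = []
    for k in range(num_classes):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(num_classes)) - tp
        fn = sum(cm[k]) - tp
        denom = 2 * tp + fp + fn
        f1.append(2 * tp / denom if denom else None)

    defined = [s for s in f1 if s is not None]
    return {
        "confusion_normalized": cm_norm,
        "overall_accuracy": overall_accuracy,
        "f1": f1,
        "mean_f1": sum(defined) / len(defined),
    }
```

For example, `evaluate([0, 0, 1, 1, 2], [0, 1, 1, 1, 2], 3)` yields an overall accuracy of 0.8, per-class F1 scores of 2/3, 0.8 and 1.0, and a mean F1 score of 37/45 (about 0.822). Note that the overall accuracy is dominated by frequent classes, whereas the unweighted mean F1 gives every class equal weight.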

We would like to stress that multiple submissions from the same authors are allowed only if the approaches differ; repeated submissions of results from the same method with different parametrizations will be refused.

The partition of the dataset for both H3D(PC) and H3D(Mesh) is consistent across all epochs.


Submit your result

As of November 2024, ground truth data is available for the test set of all epochs and modalities.

Submissions will therefore no longer be evaluated by the H3D team. We thank all participants for their contributions!

How to cite

@article{KOLLE2021100001,

title = {The Hessigheim 3D (H3D) benchmark on semantic segmentation of high-resolution 3D point clouds and textured meshes from UAV LiDAR and Multi-View-Stereo},

journal = {ISPRS Open Journal of Photogrammetry and Remote Sensing},

volume = {1},

pages = {11},

year = {2021},

issn = {2667-3932},

doi = {10.1016/j.ophoto.2021.100001},

url = {https://www.sciencedirect.com/science/article/pii/S2667393221000016},

author = {Michael Kölle and Dominik Laupheimer and Stefan Schmohl and Norbert Haala and Franz Rottensteiner and Jan Dirk Wegner and Hugo Ledoux},

}

https://doi.org/10.1016/j.ophoto.2021.100001